GPU Accelerated NetworkX Backend

Try Zero Code Change Accelerated NetworkX

Zero Code Change Acceleration

Write your code with the full ease of NetworkX. It's as easy as setting a single environment variable to begin accelerating your code on the GPU.

Run the same code on CPU and GPU

The same NetworkX code runs on CPU, and even faster on GPU - no need to have separate workflows or conditional imports.

Unlock large graph analysis in NetworkX

Get 50x to 500x speedups, depending on the algorithm and graph size. You can finally use NetworkX for large, real-world-sized graph data.

First-class support in NetworkX

GPU acceleration is achieved through the nx-cugraph backend and NetworkX function dispatching. This comes with the benefit of support from the NetworkX community.

Bringing GPU-Accelerated Graph Analytics to Every NetworkX User

Bring blazing fast performance to your graph workflows.

How to Use It

To accelerate NetworkX code, simply install nx-cugraph and enable it in NetworkX.

pip install nx-cugraph-cu13 --extra-index-url https://pypi.nvidia.com

From the shell, set an environment variable:

NX_CUGRAPH_AUTOCONFIG=True python my_networkx_script.py

From a notebook, use %env before importing NetworkX:

%env NX_CUGRAPH_AUTOCONFIG=True

import networkx

See the NetworkX documentation for additional ways to enable and use backends.
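For a plain Python script, the same variable can also be set from inside the process, as long as that happens before NetworkX is imported. This is what %env does in a notebook. The following is a minimal sketch, not part of nx-cugraph: the helper name enable_nx_cugraph_autoconfig is hypothetical, and the check against sys.modules is only an illustrative way to notice that the import has already happened.

```python
import os
import sys


def enable_nx_cugraph_autoconfig() -> bool:
    """Set NX_CUGRAPH_AUTOCONFIG=True for the current process.

    Hypothetical helper for illustration. Returns True when NetworkX has not
    been imported yet, and False when it was already imported, in which case
    the setting may come too late to take effect.
    """
    networkx_already_imported = "networkx" in sys.modules
    os.environ["NX_CUGRAPH_AUTOCONFIG"] = "True"
    return not networkx_already_imported


set_in_time = enable_nx_cugraph_autoconfig()
if not set_in_time:
    print("NetworkX was already imported; restart the process to apply the setting.")

# Import NetworkX only after the variable is set, for example:
# import networkx as nx
```

Setting the variable at the very top of the entry-point script keeps the ordering obvious and avoids depending on how the script is launched.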

Learn more about these benchmark results in the Announcement Blog and nx-cugraph documentation.

* Dataset: cit-Patents | 3.7M Nodes, 16.5M Edges

GPU: NVIDIA A100, 80 GB

CPU: Intel Xeon W9-3495X (Sapphire Rapids)

* Dataset: soc-livejournal1 | 4.8M Nodes, 69M Edges

GPU: NVIDIA A100, 80 GB

CPU: Intel Xeon W9-3495X (Sapphire Rapids)

** BC = Betweenness Centrality with k=100

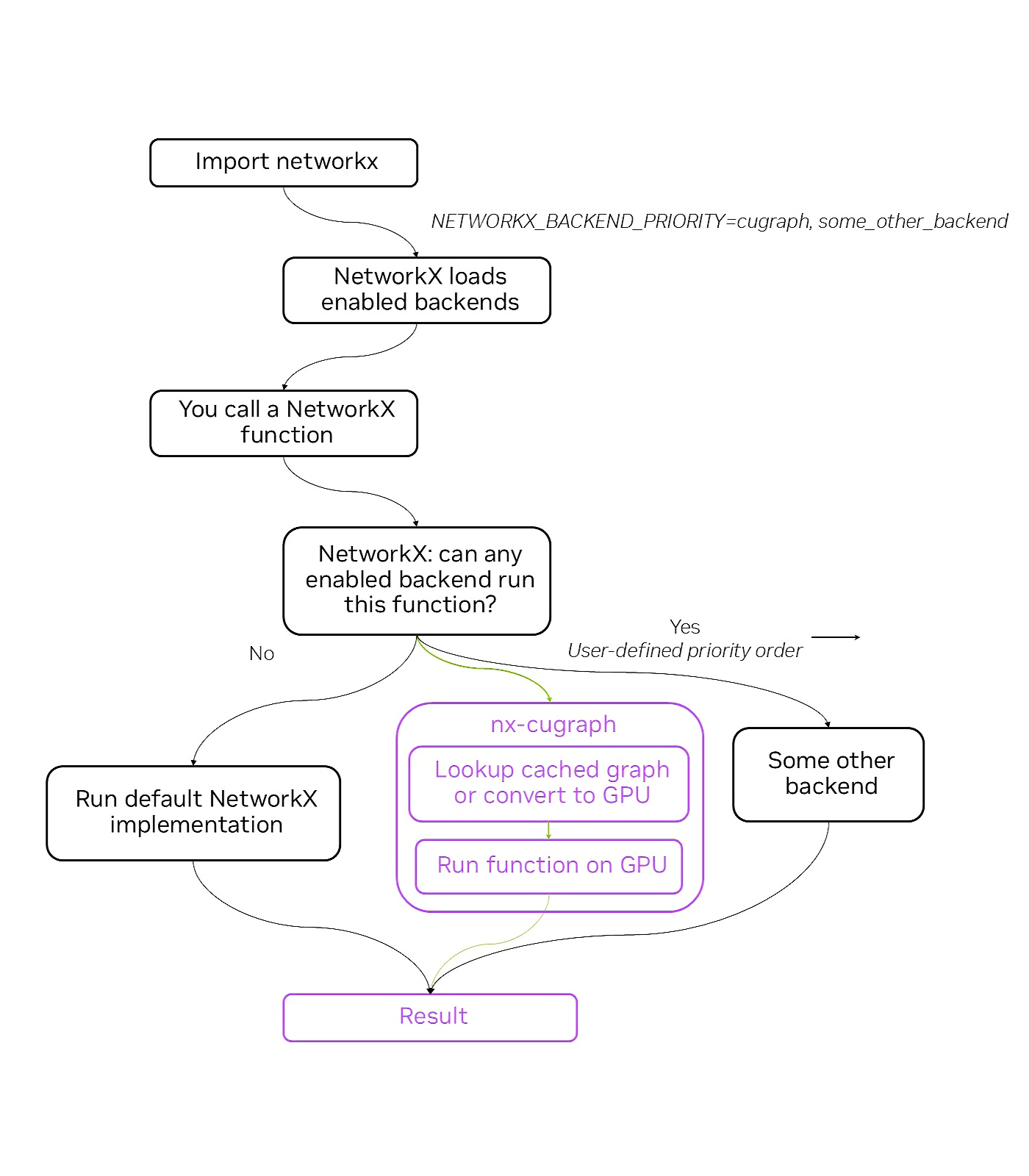

How It Works

Under the Hood

NetworkX has the ability to dispatch function calls to separately-installed third-party backends. These NetworkX backends let users experience improved performance and/or additional functionality without changing their NetworkX Python code. Examples include backends that provide algorithm acceleration using GPUs, parallel processing, graph database integration, and more.

While NetworkX is a pure-Python implementation, backends may be written to use other libraries and even specialized hardware. As such, nx-cugraph is a NetworkX backend that uses RAPIDS cuGraph and NVIDIA GPUs to significantly improve NetworkX performance.
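The dispatching idea can be illustrated with a toy example in plain Python. This is a simplified sketch of the concept only, not NetworkX's actual dispatch machinery: the registry, the dispatchable decorator, the degree_counts function, and the "toy_fast" backend are all hypothetical names invented for this illustration.

```python
import functools
from collections import Counter

# Hypothetical registry: backend name -> {function name -> implementation}.
_BACKENDS = {}


def register_backend(name, implementations):
    """Record a backend's implementations, keyed by function name."""
    _BACKENDS[name] = dict(implementations)


def dispatchable(func):
    """Route a call to a named backend when one is requested."""

    @functools.wraps(func)
    def wrapper(*args, backend=None, **kwargs):
        if backend is None:
            # No backend requested: run the default implementation.
            return func(*args, **kwargs)
        impl = _BACKENDS.get(backend, {}).get(func.__name__)
        if impl is None:
            raise NotImplementedError(
                f"{backend!r} does not implement {func.__name__}"
            )
        return impl(*args, **kwargs)

    return wrapper


@dispatchable
def degree_counts(edges):
    """Default implementation: count each node's degree with a plain loop."""
    counts = {}
    for u, v in edges:
        counts[u] = counts.get(u, 0) + 1
        counts[v] = counts.get(v, 0) + 1
    return counts


def _counter_degree_counts(edges):
    """Stand-in for a separately installed, faster implementation."""
    return dict(Counter(node for edge in edges for node in edge))


register_backend("toy_fast", {"degree_counts": _counter_degree_counts})

edges = [(0, 1), (1, 2), (2, 0), (2, 3)]
default_result = degree_counts(edges)
backend_result = degree_counts(edges, backend="toy_fast")
print(default_result == backend_result)
```

The caller's code stays the same apart from the optional backend argument, and both paths return the same result. That is the property that lets a backend change performance without changing user code.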

In a demo script

demo.ipy

import networkx as nx

import pandas as pd

url = "https://data.rapids.ai/cugraph/datasets/cit-Patents.csv"

df = pd.read_csv(url, sep=" ", names=["src", "dst"], dtype="int32")

G = nx.from_pandas_edgelist(df, source="src", target="dst")

%time result = nx.betweenness_centrality(G, k=10)

user@machine:/# ipython demo.ipy

CPU times: user 7min 36s, sys: 5.22 s, total: 7min 41s

Wall time: 7min 41s

user@machine:/# NX_CUGRAPH_AUTOCONFIG=True ipython demo.ipy

CPU times: user 4.14 s, sys: 1.13 s, total: 5.27 s

Wall time: 5.32 s

*NetworkX 3.4.1, nx-cugraph 24.10, CPU: Intel(R) Xeon(R) Gold 6128 CPU @ 3.40GHz 45GB RAM, GPU: NVIDIA Quadro RTX 8000 50GB RAM
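To put the two runs above in perspective, the speedup can be worked out from the reported wall times. The short sketch below only does that arithmetic; the to_seconds helper is a hypothetical convenience function, and the figures are the wall times printed by the two demo runs.

```python
def to_seconds(minutes: int, seconds: float) -> float:
    """Convert a 'Xmin Ys' wall time into seconds."""
    return minutes * 60 + seconds


cpu_wall = to_seconds(7, 41)    # "Wall time: 7min 41s" (default NetworkX run)
gpu_wall = to_seconds(0, 5.32)  # "Wall time: 5.32 s" (NX_CUGRAPH_AUTOCONFIG=True run)

speedup = cpu_wall / gpu_wall
print(f"Speedup: {speedup:.1f}x")
```

On this hardware and dataset the ratio is roughly 87x for betweenness centrality with k=10. Other algorithms, graph sizes, and machines will give different numbers.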

Execution Flow

Try nx-cugraph Yourself

Get more resources and connect with developers.

Try Now

See how nx-cugraph can speed up your graph workflows in a free GPU-enabled Colab notebook environment using your Google account.

Additionally, nx-cugraph is now included in the RAPIDS metapackage. Visit the RAPIDS Quick Start to get started with RAPIDS on your favorite platform.

Learn More

Now nx-cugraph is generally available (GA) and ready for wide use. You can learn more from the release blog and nx-cugraph documentation.

Interested in seeing nx-cugraph used in an end-to-end workflow? Try this SciPy conference demo notebook. Want to contribute or share feedback? Reach out via GitHub.