Ecosystem

An ecosystem of hardware, software, and developers

Hardware

RAPIDS runs on hardware powered by CUDA, the fundamental set of tools for GPU computing built by NVIDIA. RAPIDS works on PCs, workstations, data centers, and the cloud. Learn more about the different types of hardware and find the best fit for your use case on NVIDIA's product page for Data Science Hardware.

Software

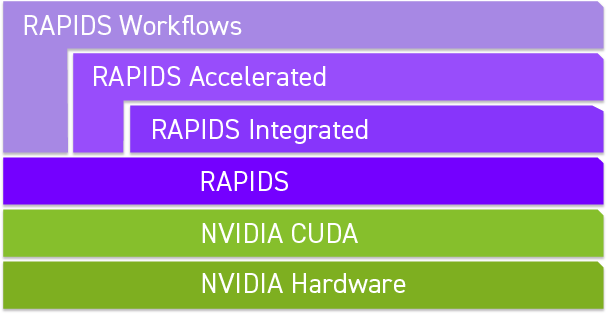

Set on a foundation of powerful hardware and parallelized by CUDA, the RAPIDS ecosystem is based on core projects, those extending its capabilities, and those built on top of RAPIDS.

Developers

We hope you will consider integrating RAPIDS into your solution or workflow. RAPIDS is built for Python and C++ developers alike. Build something great with RAPIDS.

Featured RAPIDS Libraries

Featured components of the RAPIDS ecosystem.

cuDF

cuDF is a library for data science and engineering designed for people familiar with the pandas API. Use cuDF in the new pandas Accelerator Mode to speed up pandas workflows with zero code changes, or use the classic GPU-only mode to unlock maximum performance on dataframes.
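As a rough illustration of the Accelerator Mode, the sketch below enables `cudf.pandas` when it is available and otherwise runs the same code on plain pandas. It assumes cuDF is installed alongside pandas; the `store` and `sales` columns and the `ACCELERATED` flag are made up for this example.

```python
# Minimal sketch: the pandas code itself is unchanged; only the
# optional install() call switches it to GPU execution.
try:
    import cudf.pandas

    cudf.pandas.install()  # must run before pandas is imported
    ACCELERATED = True
except Exception:
    # cuDF is not installed or no usable GPU was found: stay on CPU pandas.
    ACCELERATED = False

import pandas as pd

# Hypothetical data, used only for illustration.
df = pd.DataFrame(
    {"store": ["a", "b", "a", "b"], "sales": [10, 20, 30, 40]}
)

# Ordinary pandas code; runs on the GPU when ACCELERATED is True.
totals = df.groupby("store")["sales"].sum()
print("accelerated:", ACCELERATED)
print(totals)
```

The same effect is available without touching the script by launching it with `python -m cudf.pandas script.py`, or with `%load_ext cudf.pandas` in a notebook.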

GitHub | Documentation | Roadmap

libcudf

libcudf provides the CUDA C++ building blocks powering cuDF.

GitHub | Documentation

cuML

cuML is a library for executing machine learning algorithms on GPUs with an API that closely follows the scikit-learn API. Reduce the time to fit your model from hours or minutes to seconds.
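To show what "closely follows the scikit-learn API" can look like in practice, here is a small sketch that imports `KMeans` from cuML when possible and from scikit-learn otherwise. It assumes NumPy plus at least one of the two libraries is installed; the toy data and the `BACKEND` variable are illustrative only.

```python
import numpy as np

try:
    from cuml.cluster import KMeans  # GPU implementation

    BACKEND = "cuml"
except Exception:
    # cuML is missing or unusable here: fall back to the CPU estimator.
    from sklearn.cluster import KMeans

    BACKEND = "sklearn"

# Two well-separated toy clusters (illustrative data).
X = np.array(
    [[0.0, 0.0], [0.1, 0.2], [0.2, 0.1],
     [5.0, 5.0], [5.1, 5.2], [5.2, 5.1]],
    dtype=np.float32,
)

# The constructor arguments and fit_predict call are the same for both backends.
model = KMeans(n_clusters=2, random_state=0, n_init=10)
labels = np.asarray(model.fit_predict(X))
print(BACKEND, labels)
```

Because only the import line differs, an existing scikit-learn script can often be tried on the GPU by swapping that one line, though not every estimator or parameter is guaranteed to be available in cuML.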

GitHub | Documentation

libcuml

libcuml provides the CUDA C++ building blocks powering cuML.

GitHub | Documentation

cuGraph

cuGraph is a library to make graph analytics available to everyone. With a Python API that follows NetworkX, you can analyze networks of millions of nodes faster than ever, without specialized software.
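One way to try this from existing NetworkX code is the NetworkX backend dispatch mechanism. The sketch below assumes the separate `nx-cugraph` package is installed and registered under the backend name `cugraph` (an assumption not stated above); when it is not, the call falls back to stock NetworkX. The `backend_used` variable is illustrative.

```python
import networkx as nx

# Small built-in example graph (34 nodes).
G = nx.karate_club_graph()

try:
    # Ask NetworkX to dispatch the algorithm to the GPU backend.
    scores = nx.betweenness_centrality(G, backend="cugraph")
    backend_used = "cugraph"
except Exception:
    # Backend not installed, no GPU, or an older NetworkX without
    # backend dispatch: run the regular CPU implementation.
    scores = nx.betweenness_centrality(G)
    backend_used = "networkx"

# Node with the highest betweenness centrality.
top_node = max(scores, key=scores.get)
print(backend_used, top_node)
```

On a graph this small the GPU offers no benefit; the point is only that the calling code stays the same as the graph grows.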

GitHub | Documentation

libcugraph

libcugraph provides the CUDA C++ building blocks powering cuGraph.

GitHub | Documentation

cuSpatial

cuSpatial is a library for lightning-fast spatial analytics. Fully integrated with GeoPandas and growing fast, this toolkit will feel familiar to Python users of GIS tools.

GitHub | Documentation | Roadmap

libcuspatial

libcuspatial provides the CUDA C++ building blocks powering cuSpatial.

GitHub | Documentation

cuVS

The cuVS library contains optimized algorithms for approximate nearest neighbors and clustering, along with many other essential tools for accelerated vector search. Integrate cuVS into any vector database or vectorized data application for optimized speed and efficiency in information retrieval.

GitHub | Documentation | cuVS Page

RAFT

RAFT contains CUDA accelerated primitives for rapidly composing analytics, and is used by other RAPIDS libraries. Its building blocks include dense algebra, sparse algebra, spatial computations, clustering, solvers, and statistics.

GitHub | Documentation

kvikIO

Take full advantage of GPUDirect Storage (GDS) through powerful bindings to cuFile.

GitHub | Documentation

libkvikio

libkvikio provides the CUDA C++ building blocks powering kvikIO.

GitHub | Documentation

cuxfilter

Quickly visualize and filter large datasets with a few lines of code, using best-in-class charting libraries.

GitHub | Documentation | Roadmap

cuCIM

Greatly accelerate your computer vision and image processing tasks, especially for biomedical imaging. The cuCIM API mirrors scikit-image for image manipulation and OpenSlide for image loading.

GitHub | Documentation | Roadmap

Many More...

Visit our GitHub organization page or our documentation for a more extensive list of projects.

RAPIDS Integration

Projects and services that have RAPIDS acceleration as an added feature.

Spark RAPIDS

NVIDIA is bringing RAPIDS to Apache Spark to accelerate ETL workflows with no code changes, improving performance and reducing total costs.

GitHub | Documentation

Dask

Scale out your workload onto multiple GPUs and multiple machines with Dask. RAPIDS also develops the Dask-CUDA library for GPU-friendly cluster deployment.

GitHub | Documentation

XGBoost

XGBoost is the gold standard in single model performance for classification and regression. RAPIDS was built from the start to integrate with this amazing algorithm.

GitHub | Documentation

Visualization

RAPIDS integrates with popular and powerful visualization projects, such as Plotly Dash, HoloViz, Bokeh, and more.

RAPIDS Visualization Guide

Cloud / Deployment

RAPIDS is built to be fast wherever it runs. Learn how to run RAPIDS on all the major cloud providers, with open-source hyperparameter optimization tools, and on high-performance computing (HPC) systems that use schedulers such as Slurm.

Deployment Documentation

RAPIDS Accelerated

Cutting-edge software built with RAPIDS.

Triton

Triton Inference Server, part of the NVIDIA AI platform, streamlines and standardizes AI inference by enabling teams to deploy, run, and scale trained AI models from any framework on any GPU or CPU-based infrastructure.

GitHub | Learn More

NeMo Curator

NeMo Curator is a Python library designed for scalable and efficient dataset preparation, enhancing LLM training accuracy through GPU-accelerated data curation using Dask and RAPIDS. It offers a customizable and modular interface that simplifies pipeline expansion and accelerates model convergence by preparing high-quality tokens.

GitHub | Learn More

cuOpt

NVIDIA cuOpt is an operations research optimization API using AI to help developers create complex, real-time fleet routing. These APIs can be used to solve complex routing problems with multiple constraints and deliver new capabilities, like dynamic rerouting, job scheduling, and robotic simulations, with subsecond solver response time.

Learn More

Morpheus

NVIDIA Morpheus is an open application framework that enables cybersecurity developers to create optimized AI pipelines for filtering, processing, and classifying large volumes of real-time data.

GitHub | Documentation

Clara

NVIDIA Clara is a platform of AI applications and accelerated frameworks for healthcare developers, researchers, and medical device makers creating AI solutions to improve healthcare delivery and accelerate drug discovery.

Learn More | Documentation

MONAI

RAPIDS cuCIM has been integrated into the MONAI Transforms component to accelerate the data pathology training pipeline on GPU.

GitHub | Project Page

Merlin

NVIDIA Merlin is an open-source library providing end-to-end GPU-accelerated recommender systems. Merlin leverages RAPIDS cuDF and Dask cuDF for dataframe transformation during ETL and inference, as well as for the optimized dataloaders in TensorFlow, PyTorch, or HugeCTR to accelerate deep learning training.

GitHub | Learn More

RAPIDS Workflows

Complex pipelines that utilize RAPIDS components.

Data Science Workflows

Combining RAPIDS libraries forms powerful workflows that optimize data science pipelines. Learn more about real-world workflows and featured success stories in our Use Cases Section.

MLOps Guides

Deploy and maintain RAPIDS in production environments with the machine learning operations, hyperparameter optimization, and integration guides in our Cloud ML Examples Repository and on our Deployments Page.

Developers

Guides and resources for RAPIDS developers.

Maintainer Guides

RAPIDS projects stay cohesive thanks to detailed maintainer guides and ops procedures. Look up release schedules, get CI help, and stay up to date on our Maintainer Documentation Page.

Contribution Guides

Open source projects stay healthy with active contributions. Overall contribution guides can be found in our Documentation. Each RAPIDS repository also has a detailed contributing guide, such as cuDF's example. Check each project's README and documentation in the RAPIDS Repositories for its specific requirements.